Every program is constrained by both memory and speed. These constraints usually work against one another: improving the speed of a program often comes at the cost of memory, and vice versa. Memory, however, has become relatively inexpensive. As a result, modern optimization tends to focus on reducing time complexity.

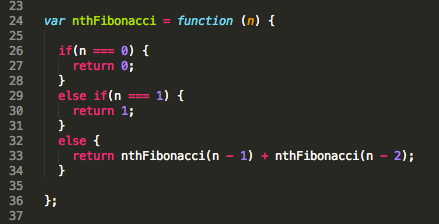

One example that demonstrates the importance of computational speed is the Fibonacci algorithm. As shown above, the naive implementation of nthFibonacci slows down rapidly as its argument n increases, because it recomputes the same values many times over. It is useful only for small values of n; larger values take far too long to compute.
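To make the slowdown concrete, here is a minimal Python sketch of a naive recursive Fibonacci, along with a helper that counts how many calls the recursion makes. The names `nth_fibonacci_naive` and `count_naive_calls` are hypothetical stand-ins for illustration, not the code pictured above, and the sketch assumes the convention F(0) = 0, F(1) = 1.

```python
def nth_fibonacci_naive(n: int) -> int:
    """Return the nth Fibonacci number (assuming F(0) = 0, F(1) = 1)."""
    if n < 2:
        return n
    # Each call spawns two more calls, so the same values are recomputed repeatedly.
    return nth_fibonacci_naive(n - 1) + nth_fibonacci_naive(n - 2)


def count_naive_calls(n: int) -> int:
    """Count the function calls the naive recursion makes to compute F(n)."""
    if n < 2:
        return 1
    return 1 + count_naive_calls(n - 1) + count_naive_calls(n - 2)


if __name__ == "__main__":
    for n in (10, 20, 25):
        print(n, nth_fibonacci_naive(n), count_naive_calls(n))
```

Under these assumptions, computing F(10) takes 177 calls while F(20) takes 21,891, so doubling n multiplies the work by more than a hundred.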

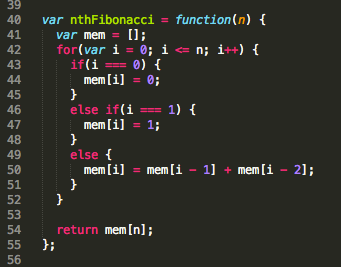

Fortunately, nthFibonacci can be refactored to avoid these problems. In the algorithm listed below, we store computed values in memory so that each one is calculated only once, reducing the overall number of computations. This technique, aptly referred to as memoization, trades extra memory for speed and is incredibly useful in large-scale computations (one ubiquitous example being Google Maps).
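As a rough illustration of memoization, the following Python sketch caches each computed value in a dictionary. The function name `nth_fibonacci_memo` and its `cache` parameter are hypothetical choices for this example rather than the author's implementation, and the same F(0) = 0, F(1) = 1 convention is assumed.

```python
from typing import Dict, Optional


def nth_fibonacci_memo(n: int, cache: Optional[Dict[int, int]] = None) -> int:
    """Return the nth Fibonacci number, storing computed values in a cache."""
    if cache is None:
        cache = {}  # fresh cache for each top-level call
    if n < 2:
        return n
    if n not in cache:
        # Compute the value once, then reuse it on every later lookup.
        cache[n] = nth_fibonacci_memo(n - 1, cache) + nth_fibonacci_memo(n - 2, cache)
    return cache[n]


if __name__ == "__main__":
    print(nth_fibonacci_memo(50))
```

Because every value from 2 to n is computed only once, the running time grows roughly linearly with n, at the cost of storing about n entries. In Python, the standard library decorator `functools.lru_cache` offers a similar effect without a hand-written cache.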